Introduction

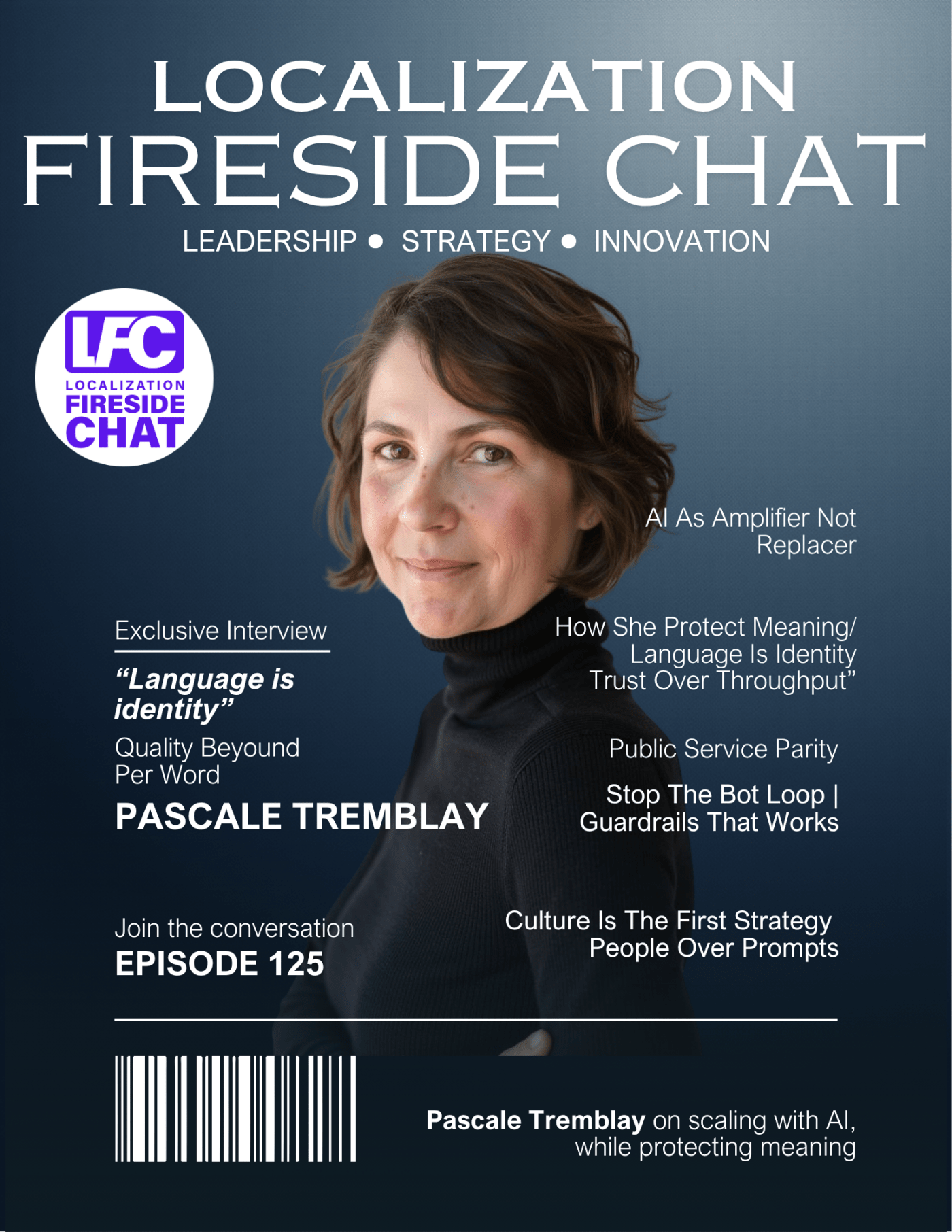

In Episode 125 of the Localization Fireside Chat, I sit down with Pascale Tremblay, Executive Consultant focused on AI plus human language frameworks and operationalization. Our question is simple. How do we scale with AI without flattening culture or meaning? Pascale brings a clear message for leaders who care about quality and trust. Language is not just text to move through a pipeline. It is identity, credibility, and access. This conversation gives practical ways to use AI for scale while keeping people in the loop and culture intact.

Main Insights and Highlights

1) Language is identity and credibility

Pascale ties language to identity from the first minutes. As a French Canadian, she points to lived experience in Canada where language is a marker of belonging and access. When services miss the mark, trust drops fast. In her words, the outcome that matters is trust and relatability. If content is technically correct but fails to respect identity, it still fails. This frame sets the tone for the entire talk and it is the right lens for any localization strategy.

2) Coexistence over dominance, the Canadian lesson

The episode revisits Canada’s bilingual reality. Policies alone do not keep languages healthy. Coexistence requires practical investment in infrastructure, from education to public services. Pascale’s challenge is direct. If we want parity, we must measure the right things. Are services available in the right language at the right time? Are speakers able to express themselves and be understood? The lesson scales beyond Canada. In any multilingual market, culture and identity must guide the work, not follow it.

3) Use AI as an amplifier, not a replacer

Pascale is pro-technology and clear about its limits. Meaning comes from human interaction and judgment. Recognition and output are not the same as meaning. AI is valuable for scale, speed, and assistance, but it cannot own the final decisions that define voice and credibility. The practical question to ask is simple. In which use cases does AI create true efficiency without removing nuance? Teams should define those use cases, set guardrails, and keep human review where risk or context is high.

4) Rethink the metrics, quality is more than output

We talk about the industry’s slippery slope. When one metric dominates, quality slides. The per-word model makes output the hero and hides the real cost of lost meaning. Pascale reminds us that quality is defined by impact. Did the content build trust? Did it help the user act? Did it reflect identity? With AI producing more output than ever, leaders must expand their KPIs. Consider task success, comprehension, readability, complaints avoided, and service parity delivered. These measures capture value that pure throughput misses.

5) Cheaper, faster, better, you only get two

The industry keeps promising the impossible triangle. Pascale’s view is pragmatic. You can hit better and faster in narrow cases, but not everywhere and not consistently. There is always a trade. Teams should say this out loud, set expectations, and choose the two that matter for the use case. Hidden shortcuts create visible trust problems later. That cost is real, even when it does not show up in the unit price.

6) Bot vs bot is a dead end

One of the most striking parts of the discussion is the growth of bot-written content reviewed by bot tools, and even of bots screening people for jobs. Pascale’s concern is clear. Humans are not interchangeable and automation cannot read between the lines. When bots grade bots, meaning flattens and talent is missed. Leaders should put people back in charge of high-impact steps, then let AI assist the parts that are safe to automate.

7) A practical playbook for teams

- Declare language as identity and make trust a measured outcome

- Map use cases by risk, keep human review where context or emotion matters

- Use AI as an amplifier for scale, research, and drafting, not as the voice of record

- Redefine quality KPIs around impact, not just output and cost

- Design for parity in public services and customer touchpoints

- Train teams to recognize when content is slipping into noise and fix the inputs
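
For teams that want to make the second item concrete, here is one possible way to sketch risk-based routing in Python. This is an illustration only, not something Pascale describes in the episode: the `UseCase` fields, the 1 to 5 risk scale, the threshold of 3, and the sample use cases are all hypothetical and would need to be set by the people who know the content.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    risk: int            # hypothetical scale: 1 (low) to 5 (high)
    context_heavy: bool  # True when culture, emotion, or identity is central


def route(use_case: UseCase, risk_threshold: int = 3) -> str:
    """Return who owns the final decision for this use case."""
    # Anything high risk or context heavy stays with a human reviewer.
    if use_case.context_heavy or use_case.risk >= risk_threshold:
        return "human review"
    # Everything else is treated as safe for AI assistance.
    return "AI-assisted"


# Hypothetical examples, for illustration only.
use_cases = [
    UseCase("internal search keywords", risk=1, context_heavy=False),
    UseCase("public service notice", risk=5, context_heavy=True),
    UseCase("brand campaign tagline", risk=2, context_heavy=True),
]

routing = {uc.name: route(uc) for uc in use_cases}

for name, owner in routing.items():
    print(f"{name}: {owner}")
```

The point of the sketch is the shape of the decision, not the numbers: context and risk are checked first, and AI assistance is the fallback rather than the default.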

Conclusion and Reflection

The big idea is simple. AI can help you scale, but people keep meaning alive. If you care about credibility in multilingual markets, lead with identity, choose where AI fits, and measure trust as a first-class outcome. Pascale’s message is not anti-technology. It is pro human judgment. The result is a model that scales without erasing voice, culture, or the people you serve.

Watch the full episode here: https://youtu.be/h_6i3qM5HtQ

Call to Action and Contact Info

For more conversations with industry leaders, subscribe to the Localization Fireside Chat on YouTube, follow us on LinkedIn, or reach out to Robin Ayoub at robin@robinayoub.com.

Disclaimer

The Localization Fireside Chat (LFC) podcast is a personal project. Views shared are those of the host and guests. Content is not sponsored, is not an endorsement, and does not represent any organization or affiliation. It is shared solely for informational and community-building purposes.
